The rise of artificial intelligence has unleashed supercharged market FOMO at every scale. Every startup, major tech company, and government agency is competing to build better models, cleaner datasets, and faster labeling pipelines, all of which require expertise from real people.

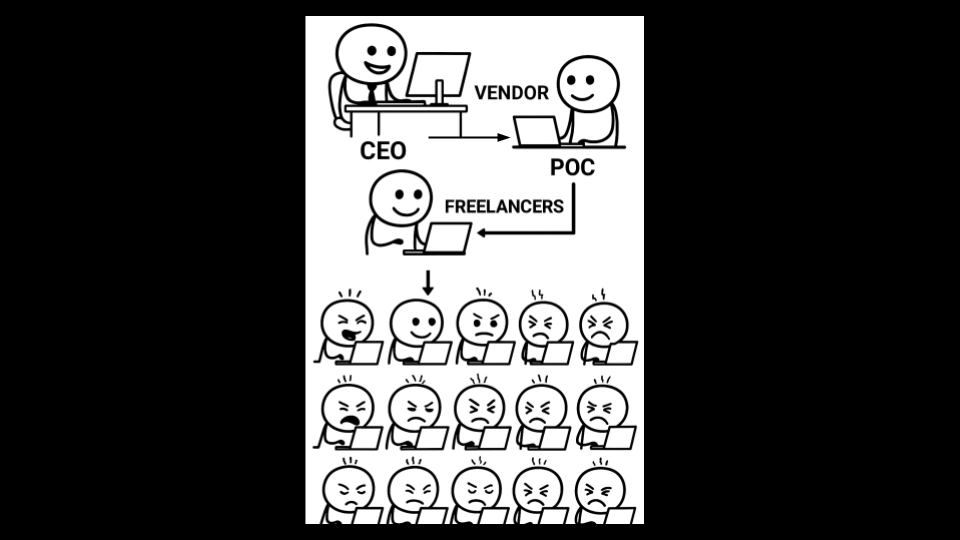

This emerging “AI gig economy” mirrors the labor structure of rideshare companies like Uber: flexible on the surface, but exploitative in practice. Workers are atomized, underpaid, and stripped of bargaining power, all while producing the data that makes AI systems possible. Annotation platforms rely on opaque quality-scoring algorithms and one-sided feedback loops, creating conditions nearly identical to those faced by app-based drivers and couriers.

In many exchanges with headhunters, annotation is pitched to recruits as a form of employment, which it is not. Recruits are then asked not to take other work, despite the absence of any exclusivity contract.

If we take OpenAI’s self-attested worth (a valuation of roughly $500 billion as of October 2025) and compare it with its projected $12 billion in annual revenue, a multiple of roughly 40 times revenue, we can surmise that the data-annotation and human-feedback pipelines underpinning such growth are a multi-billion-dollar accelerant within the trillion-dollar AI economy. These invisible human systems transform raw data into intelligence, serving as the bridge between massive corporate valuations and the cognitive labor quietly sustaining them.

Data Annotation as the New Rideshare

Just as Uber drivers provide transportation without traditional employment protections, AI gig workers provide linguistic, visual, and behavioral data without recognition or fair pay. Tasks range from labeling toxic content to fine-tuning model responses to complex prompts.

The shift is palpable: Uber itself has entered this space via its division called “Scaled Solutions”, where it is recruiting contractors around the world to perform image, video, and text annotation for internal and external clients. Meanwhile, Mercor has built a marketplace that connects industry experts and gig contractors to train AI models, framing this as a “new category of work”. These labour models carry the logic of flexible gig work into the cognitive realm, along with some of the same controversies: delayed pay, workers burned by earlier promises, and contradictory instructions once deployed on a project.

Algorithmic Management and Worker Precarity

The same algorithmic control that governs Uber drivers’ acceptance rates and surge pricing is now being deployed in AI gig platforms. Annotators are monitored through time-tracking software, browser telemetry, and keystroke analysis. “Quality” and “task completion” scores determine whether they receive future work. Unlike traditional employment, there are no appeals, HR channels, or labour protections. A sudden account suspension can mean losing one’s entire income stream.

In the case of Mercor, for example, the company now pays “over $1.5 million a day” to thousands of human contractors, according to its CEO. While this may sound generous on its face, the structure remains gig-contractor based: no guaranteed wage, no long-term stability. Spread across thousands of contractors, that figure likely amounts to less than a few hundred dollars per worker per day.
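To make the per-worker figure concrete, here is a back-of-the-envelope sketch in Python that divides the reported daily payout by a few contractor headcounts. The headcounts are assumptions chosen only to span “thousands”, and the even split is a simplification; the source gives no breakdown of how pay is actually distributed.

```python
# Back-of-the-envelope sketch: average daily payout per contractor.
# DAILY_PAYOUT_USD is the "over $1.5 million a day" figure quoted above.
# The headcounts are hypothetical, chosen only to span "thousands".
DAILY_PAYOUT_USD = 1_500_000
ASSUMED_HEADCOUNTS = [5_000, 10_000, 30_000]  # hypothetical values


def average_daily_pay(total_payout: float, contractors: int) -> float:
    """Return the mean payout per contractor, assuming an even split."""
    if contractors <= 0:
        raise ValueError("contractors must be positive")
    return total_payout / contractors


for headcount in ASSUMED_HEADCOUNTS:
    pay = average_daily_pay(DAILY_PAYOUT_USD, headcount)
    print(f"{headcount:>6,} contractors -> ${pay:,.2f} per contractor per day")
```

Under these assumed headcounts the average comes to between $50 and $300 per contractor per day. An average also hides the skew: a small group of highly paid experts can coexist with many contractors earning far less, and nothing in the figure guarantees work on any given day.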

A False Promise of Flexibility

Gig platforms sell the illusion of autonomy: “Work when you want.” But the reality is closer to dependency on algorithmic, diffuse management. Workers must constantly check dashboards, chats, and emails for available micro-tasks, racing anywhere from dozens to thousands of others to claim them. This pseudo-flexibility is reminiscent of Uber drivers waiting for rides in oversaturated markets: technically free, economically trapped. The psychological stress of unstable income, combined with the cognitive load of repetitive data labeling, produces burnout that mirrors physical gig labour.

Global Wage Arbitrage and Digital Colonialism

By decentralising cognitive labour, AI companies are engaging in what many scholars call digital colonialism: extracting intellectual and emotional labour from the Global South to train models that enrich corporations in the Global North. Workers in the Philippines, Kenya, or Latin America, for example, may earn wages far below local living standards while doing the labeling tasks that power global models. (Surveys show many gig annotators still earn less than minimum-wage equivalents once issues like task rejections are factored in.)
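The effect of task rejections on take-home pay can be sketched with simple arithmetic. The Python snippet below is illustrative only: the per-task rate, task throughput, rejection rate, and unpaid waiting time are all hypothetical inputs, not figures from any survey or platform.

```python
# Illustrative sketch: effective hourly wage once rejected tasks go unpaid.
# Every input value used below is hypothetical, not taken from any survey.


def effective_hourly_wage(
    pay_per_task: float,
    tasks_per_hour: float,
    rejection_rate: float,
    unpaid_hours_per_paid_hour: float = 0.0,
) -> float:
    """Return hourly earnings after rejections and unpaid overhead.

    rejection_rate is the fraction of submitted tasks that go unpaid.
    unpaid_hours_per_paid_hour is time spent waiting for or hunting tasks,
    expressed per hour of actual task work.
    """
    if not 0.0 <= rejection_rate <= 1.0:
        raise ValueError("rejection_rate must be between 0 and 1")
    if unpaid_hours_per_paid_hour < 0.0:
        raise ValueError("unpaid_hours_per_paid_hour must be non-negative")
    earned_per_task_hour = pay_per_task * tasks_per_hour * (1.0 - rejection_rate)
    return earned_per_task_hour / (1.0 + unpaid_hours_per_paid_hour)


# Hypothetical worker: $0.50 per task, 20 tasks per hour.
nominal = effective_hourly_wage(0.50, 20, rejection_rate=0.0)
adjusted = effective_hourly_wage(
    0.50, 20, rejection_rate=0.20, unpaid_hours_per_paid_hour=0.5
)
print(f"nominal:  ${nominal:.2f}/hour")
print(f"adjusted: ${adjusted:.2f}/hour")
```

With these made-up inputs, a nominal $10.00 per hour falls to about $5.33 once a 20% rejection rate and half an hour of unpaid waiting per working hour are counted. The point is the direction of the adjustment, not the specific numbers.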

The Ironic Feedback Loop

Ironically, the very models being trained by underpaid gig workers are now being used to automate those same jobs. Reinforcement learning from human feedback (RLHF) depends on human annotators to teach models ethical and contextual reasoning, but as models improve, they threaten to displace the annotators themselves. This recursive dynamic mirrors earlier waves of automation in manufacturing and logistics but now targets cognitive and linguistic skills once considered “automation-proof”. Below, we spotlight the profiles and risks of some of the leading annotation vendors in the market.

Company Spotlights

Mercor

Founded in 2023 and headquartered in San Francisco, Mercor describes its business as “connecting human expertise with leading AI labs and enterprises that drive the AI economy”. The company recruits former senior employees in fields such as law, banking, and consulting, paying them hundreds of dollars an hour to write reports and fill out forms to train models.

Why this matters: This model pushes the gig economy upmarket, from low-skill annotation tasks to high-skill “expert contract work” for AI. It shows that any human cognitive work is becoming commoditised.

Risk: The gig-labor framing dismantles traditional employment protections, shifting risk and cost to individuals while firms capture value.

Uber (Scaled Solutions)

Uber has announced a division, “Scaled Solutions”, which leverages its existing gig-work infrastructure to provide data annotation and model-training services via its global workforce of over 8 million earners across 72 countries. Uber is now using the same model that managed drivers and couriers to manage AI-labelling contractors.

Why this matters: Reusing ride-hailing gig infrastructure for data-annotation work shows how gig-economy logic is migrating into knowledge work.

Risk: Gig work conditions (low bargaining power, global competition, algorithmic scheduling) are imported into the AI workforce.

Meta (via Scale AI investment)

In June 2025, Meta announced a $14.3 billion investment for a 49% stake in Scale AI, a major data-annotation firm, valuing it at roughly US$29 billion. Scale’s business depends on gig and contract annotators for human-in-the-loop training. A recent TIME article points out that despite the enormous investment, the workers behind the labeling may see no improvement in conditions.

Why this matters: Meta treating annotation infrastructure as a strategic asset signals how labour at scale is now central to AI leadership, not just algorithms or compute.

Risk: When data work is central, the drive to scale and minimise cost can lead firms to treat human labour purely as a cost centre rather than as work done by people. The Meta-Scale ecosystem intensifies competition among annotation firms, putting downward pressure on wages and standards.

The Race to the Bottom

Ultimately, this is a race to the bottom for what was once respectable and highly paid knowledge work. When firms optimise for ultra-flexible, on-demand cognitive labour, the pattern is predictable:

- Wages are set task by task, often without a clear hourly guarantee.

- Global competition drives local wage erosion.

- Few protections exist against task rejection or algorithmic suspension.

- Quality demands escalate while pay remains stagnant or falls.

- Labour becomes de-skilled and commoditized: what once required specialised knowledge becomes micro-tasked and outsourced.

One newsletter captures this in its title, “Jack of All Trades, Master of None: The Data Annotation Gold Rush”, describing how annotation platforms are racing workers through ever-cheaper tasks, even as models demand more nuance and skill.

Toward an Ethical AI Labour Framework

If this trend continues unchecked, AI development will replicate the worst aspects of the gig economy: opacity, precarity, and exploitation disguised as opportunity. To counter this, the AI industry must embrace ethical labour standards, including:

- Transparent pay structures tied to regional living wages, not just global bidding.

- Collective representation for annotators, linguists and model-trainers.

- Data-labour rights: recognition and perhaps royalty or profit-share for human contributions to model development.

Governments and tech companies alike must treat AI labour as essential infrastructure—and not as disposable digital piece-work.